File Info

| Field | Value |
| --- | --- |
| Exam | AWS DevOps Engineer - Professional |
| Number | DOP-C01 |
| File Name | Amazon.DOP-C01.Dump4Sure.2024-10-27.82q.tqb |
| Size | 858 KB |
| Posted | Oct 27, 2024 |

Demo Questions

Question 1

A Developer is designing a continuous deployment workflow for a new Development team to facilitate the process for source code promotion in AWS. Developers would like to store and promote code for deployment from development to production while maintaining the ability to roll back that deployment if it fails.

Which design will incur the LEAST amount of downtime?

- Create one repository in AWS CodeCommit. Create a development branch to hold merged changes. Use AWS CodeBuild to build and test the code stored in the development branch triggered on a new commit. Merge to the master and deploy to production by using AWS CodeDeploy for a blue/green deployment.

- Create one repository for each Developer in AWS CodeCommit and another repository to hold the production code. Use AWS CodeBuild to merge development and production repositories, and deploy to production by using AWS CodeDeploy for a blue/green deployment.

- Create one repository for development code in AWS CodeCommit and another repository to hold the production code. Use AWS CodeBuild to merge development and production repositories, and deploy to production by using AWS CodeDeploy for a blue/green deployment.

- Create a shared Amazon S3 bucket for the Development team to store their code. Set up an Amazon CloudWatch Events rule to trigger an AWS Lambda function that deploys the code to production by using AWS CodeDeploy for a blue/green deployment.

Correct answer: A

Question 2

A DevOps Engineer discovered a sudden spike in a website's page load times and found that a recent deployment occurred. A brief diff of the related commit shows that the URL for an external API call was altered and the connecting port changed from 80 to 443. The external API has been verified and works outside the application. The application logs show that the connection is now timing out, resulting in multiple retries and eventual failure of the call.

Which debug steps should the Engineer take to determine the root cause of the issue?

- Check the VPC Flow Logs looking for denies originating from Amazon EC2 instances that are part of the web Auto Scaling group. Check the ingress security group rules and routing rules for the VPC.

- Check the existing egress security group rules and network ACLs for the VPC. Also check the application logs being written to Amazon CloudWatch Logs for debug information.

- Check the egress security group rules and network ACLs for the VPC. Also check the VPC flow logs looking for accepts originating from the web Auto Scaling group.

- Check the application logs being written to Amazon CloudWatch Logs for debug information. Check the ingress security group rules and routing rules for the VPC.

Correct answer: C
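
A connection that times out right after the port changed from 80 to 443 is consistent with outbound traffic being blocked. The sketch below checks a list of egress rules for a given port. The rule shape mirrors the `IpPermissionsEgress` entries that EC2 returns for a security group; the sample rules are hypothetical, and the check deliberately ignores destination CIDR ranges and network ACLs.

```python
def egress_allows(rules, port, protocol="tcp"):
    """Return True if any egress rule permits the given protocol and port."""
    for rule in rules:
        rule_protocol = rule.get("IpProtocol")
        if rule_protocol == "-1":  # "-1" means all protocols and all ports
            return True
        if rule_protocol != protocol:
            continue
        if rule.get("FromPort", -1) <= port <= rule.get("ToPort", -1):
            return True
    return False


# Hypothetical egress rules written before the API call moved to port 443.
old_rules = [
    {
        "IpProtocol": "tcp",
        "FromPort": 80,
        "ToPort": 80,
        "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
    }
]

print("port 80 allowed:", egress_allows(old_rules, 80))
print("port 443 allowed:", egress_allows(old_rules, 443))
```

In a real investigation the same question would also have to be asked of the network ACLs, which are stateless and evaluated separately from security groups.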

Question 3

A DevOps Engineer is working on a project that is hosted on Amazon Linux and has failed a security review.

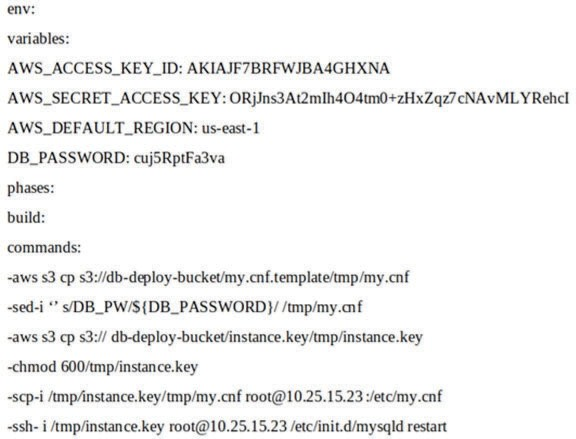

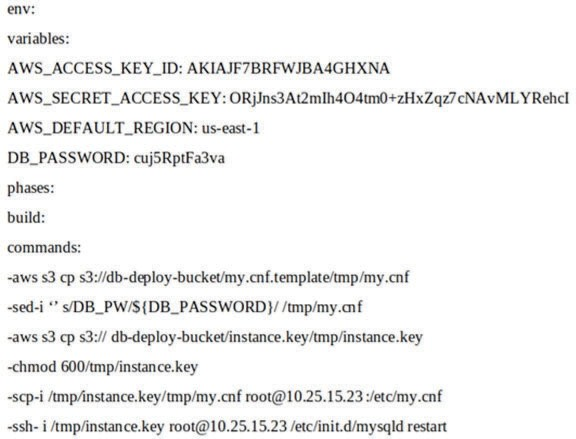

The DevOps Manager has been asked to review the company buildspec.yaml file for an AWS CodeBuild project and provide recommendations. The buildspec.yaml file is configured as follows:

What changes should be recommended to comply with AWS security best practices? (Choose three.)

- Add a post-build command to remove the temporary files from the container before termination to ensure they cannot be seen by other CodeBuild users.

- Update the CodeBuild project role with the necessary permissions and then remove the AWS credentials from the environment variable.

- Store the DB_PASSWORD as a SecureString value in AWS Systems Manager Parameter Store and then remove the DB_PASSWORD from the environment variables.

- Move the environment variables to the ‘db-deploy-bucket’ Amazon S3 bucket, add a prebuild stage to download, then export the variables.

- Use AWS Systems Manager Run Command instead of running scp and ssh commands directly against the instance.

- Scramble the environment variables using XOR followed by Base64, add a section to install, and then run XOR and Base64 to the build phase.

Correct answer: BCE

Explanation:

https://docs.aws.amazon.com/systems-manager/latest/userguide/sysman-paramstore-console.html
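
As a rough illustration of moving `DB_PASSWORD` out of plaintext environment variables, the sketch below builds a buildspec-shaped dictionary that uses the `env` / `parameter-store` mapping. The original buildspec is only available as an image, so the parameter name and the build command here are assumptions, not the company's actual file.

```python
import json

# Hypothetical Parameter Store name; not taken from the original buildspec.
DB_PASSWORD_PARAMETER = "/example/db/password"

buildspec = {
    "version": 0.2,
    "env": {
        # Entries under "parameter-store" are resolved by CodeBuild at build
        # time, so the secret value never appears in the file itself.
        "parameter-store": {"DB_PASSWORD": DB_PASSWORD_PARAMETER},
    },
    "phases": {
        "build": {"commands": ["echo 'DB_PASSWORD is available to this phase'"]},
    },
}

SENSITIVE_NAMES = ("DB_PASSWORD", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def has_plaintext_secret(spec, names=SENSITIVE_NAMES):
    """Return True if a sensitive name is set under env.variables (plaintext)."""
    plain = spec.get("env", {}).get("variables", {})
    return any(name in plain for name in names)


print(json.dumps(buildspec, indent=2))
print("plaintext secret present:", has_plaintext_secret(buildspec))
```

The AWS access keys need no replacement entry at all: with the right permissions on the CodeBuild project role, the build obtains credentials from the role.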

Question 4

A DevOps Engineer administers an application that manages video files for a video production company. The application runs on Amazon EC2 instances behind an ELB Application Load Balancer. The instances run in an Auto Scaling group across multiple Availability Zones. Data is stored in an Amazon RDS PostgreSQL Multi-AZ DB instance, and the video files are stored in an Amazon S3 bucket. On a typical day, 50 GB of new video are added to the S3 bucket. The Engineer must implement a multi-region disaster recovery plan with the least data loss and the lowest recovery times. The current application infrastructure is already described using AWS CloudFormation.

Which deployment option should the Engineer choose to meet the uptime and recovery objectives for the system?

- Launch the application from the CloudFormation template in the second region, which sets the capacity of the Auto Scaling group to 1. Create an Amazon RDS read replica in the second region. In the second region, enable cross-region replication between the original S3 bucket and a new S3 bucket. To fail over, promote the read replica as master. Update the CloudFormation stack and increase the capacity of the Auto Scaling group.

- Launch the application from the CloudFormation template in the second region, which sets the capacity of the Auto Scaling group to 1. Create a scheduled task to take daily Amazon RDS cross-region snapshots to the second region. In the second region, enable cross-region replication between the original S3 bucket and Amazon Glacier. In a disaster, launch a new application stack in the second region and restore the database from the most recent snapshot.

- Launch the application from the CloudFormation template in the second region, which sets the capacity of the Auto Scaling group to 1. Use Amazon CloudWatch Events to schedule a nightly task to take a snapshot of the database, copy the snapshot to the second region, and replace the DB instance in the second region from the snapshot. In the second region, enable cross-region replication between the original S3 bucket and a new S3 bucket. To fail over, increase the capacity of the Auto Scaling group.

- Use Amazon CloudWatch Events to schedule a nightly task to take a snapshot of the database and copy the snapshot to the second region. Create an AWS Lambda function that copies each object to a new S3 bucket in the second region in response to S3 event notifications. In the second region, launch the application from the CloudFormation template and restore the database from the most recent snapshot.

Correct answer: A

Explanation:

https://aws.amazon.com/about-aws/whats-new/2016/06/amazon-rds-for-postgresql-now-supports-cross-region-read-replicas/

Question 5

A government agency is storing highly confidential files in an encrypted Amazon S3 bucket. The agency has configured federated access and has allowed only a particular on-premises Active Directory user group to access this bucket.

The agency wants to maintain audit records and automatically detect and revert any accidental changes administrators make to the IAM policies used for providing this restricted federated access.

Which of the following options provide the FASTEST way to meet these requirements?

- Configure an Amazon CloudWatch Events Event Bus on an AWS CloudTrail API for triggering the AWS Lambda function that detects and reverts the change.

- Configure an AWS Config rule to detect the configuration change and execute an AWS Lambda function to revert the change.

- Schedule an AWS Lambda function that will scan the IAM policy attached to the federated access role for detecting and reverting any changes.

- Restrict administrators in the on-premises Active Directory from changing the IAM policies.

Correct answer: B

Explanation:

https://docs.aws.amazon.com/AmazonCloudWatch/latest/events/Create-CloudWatch-Events-CloudTrail-Rule.html
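
The linked page covers CloudWatch Events rules that fire on API calls recorded by CloudTrail. A minimal sketch of such an event pattern for IAM policy changes follows. The list of `eventName` values is an illustrative selection, and `matches` is a simplified, exact-match stand-in for the service's own matching, included only so the pattern can be exercised locally.

```python
iam_policy_change_pattern = {
    "source": ["aws.iam"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["iam.amazonaws.com"],
        # Illustrative subset of IAM calls that alter a role's policies.
        "eventName": [
            "PutRolePolicy",
            "DeleteRolePolicy",
            "AttachRolePolicy",
            "DetachRolePolicy",
            "CreatePolicyVersion",
        ],
    },
}


def matches(pattern, event):
    """Simplified matcher: every pattern key must exist in the event and the
    event value must be one of the listed values (nested dicts recurse)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical event, trimmed to the fields the pattern inspects.
sample_event = {
    "source": "aws.iam",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {"eventSource": "iam.amazonaws.com", "eventName": "PutRolePolicy"},
}

print("rule would fire:", matches(iam_policy_change_pattern, sample_event))
```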

Question 6

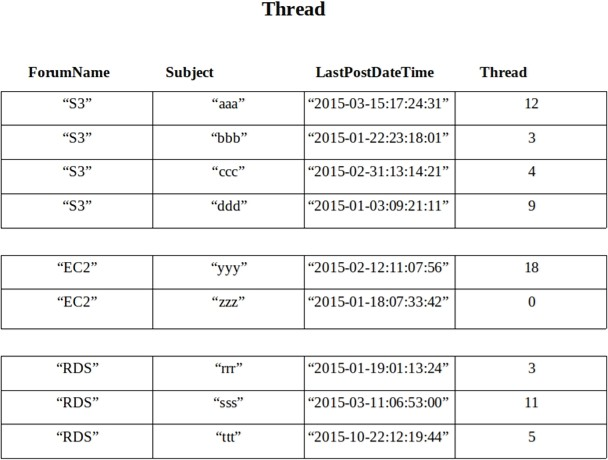

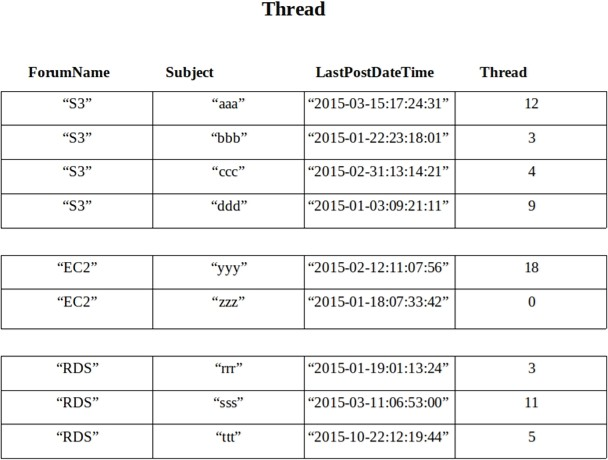

A company wants to use Amazon DynamoDB for maintaining metadata on its forums. See the sample data set in the image below.

A DevOps Engineer is required to define the table schema with the partition key, the sort key, the local secondary index, projected attributes, and fetch operations. The schema should support the following example searches using the least provisioned read capacity units to minimize cost.

- Search within ForumName for items where the subject starts with ‘a’.

- Search forums within the given LastPostDateTime time frame.

- Return the thread value where LastPostDateTime is within the last three months.

Which schema meets the requirements?

- Use Subject as the partition key and ForumName as the sort key. Have LSI with LastPostDateTime as the sort key and fetch operations for Thread.

- Use ForumName as the partition key and Subject as the sort key. Have LSI with LastPostDateTime as the sort key and the projected attribute Thread.

- Use ForumName as the partition key and Subject as the sort key. Have LSI with Thread as the sort key and the projected attribute LastPostDateTime.

- Use Subject as the partition key and ForumName as the sort key. Have LSI with Thread as the sort key and fetch operations for LastPostDateTime.

Correct answer: B

Explanation:

https://aws.amazon.com/blogs/database/using-sort-keys-to-organize-data-in-amazon-dynamodb/
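
One way to picture the keyed answer is as the parameters of a DynamoDB `CreateTable` request. The sketch below is an assumption-laden illustration: the table name, index name, string attribute types, and capacity figures are placeholders, since the sample data set is only shown as an image.

```python
table_definition = {
    "TableName": "ForumThreads",  # hypothetical name
    "AttributeDefinitions": [
        {"AttributeName": "ForumName", "AttributeType": "S"},
        {"AttributeName": "Subject", "AttributeType": "S"},
        {"AttributeName": "LastPostDateTime", "AttributeType": "S"},
    ],
    "KeySchema": [
        {"AttributeName": "ForumName", "KeyType": "HASH"},  # partition key
        {"AttributeName": "Subject", "KeyType": "RANGE"},   # sort key
    ],
    "LocalSecondaryIndexes": [
        {
            "IndexName": "LastPostIndex",  # hypothetical name
            "KeySchema": [
                {"AttributeName": "ForumName", "KeyType": "HASH"},
                {"AttributeName": "LastPostDateTime", "KeyType": "RANGE"},
            ],
            # Projecting Thread lets index queries return it without an
            # extra read against the base table.
            "Projection": {
                "ProjectionType": "INCLUDE",
                "NonKeyAttributes": ["Thread"],
            },
        }
    ],
    # Placeholder capacity values.
    "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
}


def key_of(key_schema, key_type):
    """Return the attribute name holding the given key type (HASH or RANGE)."""
    return next(k["AttributeName"] for k in key_schema if k["KeyType"] == key_type)


table_pk = key_of(table_definition["KeySchema"], "HASH")
lsi = table_definition["LocalSecondaryIndexes"][0]
print("table partition key:", table_pk)
print("LSI sort key:", key_of(lsi["KeySchema"], "RANGE"))
```

A local secondary index must share the table's partition key, which is why the index repeats `ForumName` and only swaps the sort key.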

Question 7

A business has an application that consists of five independent AWS Lambda functions.

The DevOps Engineer has built a CI/CD pipeline using AWS CodePipeline and AWS CodeBuild that builds, tests, packages, and deploys each Lambda function in sequence. The pipeline uses an Amazon CloudWatch Events rule to ensure the pipeline execution starts as quickly as possible after a change is made to the application source code.

After working with the pipeline for a few months, the DevOps Engineer has noticed the pipeline takes too long to complete.

What should the DevOps Engineer implement to BEST improve the speed of the pipeline?

- Modify the CodeBuild projects within the pipeline to use a compute type with more available network throughput.

- Create a custom CodeBuild execution environment that includes a symmetric multiprocessing configuration to run the builds in parallel.

- Modify the CodePipeline configuration to execute actions for each Lambda function in parallel by specifying the same runOrder.

- Modify each CodeBuild project to run within a VPC and use dedicated instances to increase throughput.

Correct answer: C

Explanation:

https://docs.aws.amazon.com/codepipeline/latest/userguide/reference-pipeline-structure.html
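
To make the `runOrder` idea concrete, here is a small sketch that generates one pipeline stage with a CodeBuild action per Lambda function, all sharing the same `runOrder` so CodePipeline can run them in parallel. The function names, project names, and artifact name are hypothetical.

```python
import json


def build_stage(function_names, run_order=1):
    """Build a stage whose actions all share one runOrder value."""
    return {
        "name": "Build",
        "actions": [
            {
                "name": f"Build-{fn}",
                "actionTypeId": {
                    "category": "Build",
                    "owner": "AWS",
                    "provider": "CodeBuild",
                    "version": "1",
                },
                # Hypothetical CodeBuild project naming scheme.
                "configuration": {"ProjectName": f"{fn}-build"},
                "inputArtifacts": [{"name": "SourceOutput"}],
                "runOrder": run_order,
            }
            for fn in function_names
        ],
    }


# Five hypothetical function names standing in for the five Lambda functions.
functions = ["orders", "payments", "inventory", "shipping", "notifications"]
stage = build_stage(functions)
print(json.dumps(stage, indent=2))
```

Actions with different `runOrder` values inside a stage run sequentially in ascending order, so giving each function its own number would reproduce the slow, serial behaviour described in the question.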

Question 8

A company uses a complex system that consists of networking, IAM policies, and multiple three-tier applications. Requirements are still being defined for a new system, so the number of AWS components present in the final design is not known. The DevOps Engineer needs to begin defining AWS resources using AWS CloudFormation to automate and version-control the new infrastructure.

What is the best practice for using CloudFormation to create new environments?

- Manually construct the networking layer using Amazon VPC and then define all other resources using CloudFormation.

- Create a single template to encompass all resources that are required for the system so there is only one template to version-control.

- Create multiple separate templates for each logical part of the system, use cross-stack references in CloudFormation, and maintain several templates in version control.

- Create many separate templates for each logical part of the system, and provide the outputs from one to the next using an Amazon EC2 instance running SDK for granular control.

Correct answer: C

Explanation:

https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/walkthrough-crossstackref.html
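
A cross-stack reference pairs an `Export` in one template's `Outputs` with `Fn::ImportValue` in another. The sketch below shows two deliberately tiny templates as Python dictionaries and a helper that checks every import has a matching export. The resource names, CIDR block, and export name are hypothetical.

```python
network_template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "Vpc": {"Type": "AWS::EC2::VPC", "Properties": {"CidrBlock": "10.0.0.0/16"}},
    },
    "Outputs": {
        # The export name is what other stacks import.
        "VpcId": {"Value": {"Ref": "Vpc"}, "Export": {"Name": "network-VpcId"}},
    },
}

app_template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "AppSecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "Application tier",
                "VpcId": {"Fn::ImportValue": "network-VpcId"},
            },
        },
    },
}


def exports_of(template):
    """Collect export names declared in a template's Outputs section."""
    outputs = template.get("Outputs", {}).values()
    return {o["Export"]["Name"] for o in outputs if "Export" in o}


def imports_of(node):
    """Recursively collect string arguments of Fn::ImportValue."""
    found = set()
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "Fn::ImportValue" and isinstance(value, str):
                found.add(value)
            else:
                found |= imports_of(value)
    elif isinstance(node, list):
        for item in node:
            found |= imports_of(item)
    return found


unresolved = imports_of(app_template) - exports_of(network_template)
print("unresolved imports:", sorted(unresolved))
```

Keeping each logical layer in its own template means the network stack can be versioned and updated separately from the application stacks that consume its exports.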

Question 9

A DevOps Engineer is deploying a new web application. The company chooses AWS Elastic Beanstalk for deploying and managing the web application, and Amazon RDS MySQL to handle persistent data. The company requires that new deployments have minimal impact if they fail. The application resources must be at full capacity during deployment, and rolling back a deployment must also be possible.

Which deployment sequence will meet these requirements?

- Deploy the application using Elastic Beanstalk and connect to an external RDS MySQL instance using Elastic Beanstalk environment properties. Use Elastic Beanstalk features for a blue/green deployment to deploy the new release to a separate environment, and then swap the CNAME in the two environments to redirect traffic to the new version.

- Deploy the application using Elastic Beanstalk, and include RDS MySQL as part of the environment. Use default Elastic Beanstalk behavior to deploy changes to the application, and let rolling updates deploy changes to the application.

- Deploy the application using Elastic Beanstalk, and include RDS MySQL as part of the environment. Use Elastic Beanstalk immutable updates for application deployments.

- Deploy the application using Elastic Beanstalk, and connect to an external RDS MySQL instance using Elastic Beanstalk environment properties. Use Elastic Beanstalk immutable updates for application deployments.

Correct answer: D

Explanation:

https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.deploy-existing-version.html
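
Elastic Beanstalk deployment behaviour is controlled through option settings. The following sketch assembles settings that select the immutable deployment policy and pass an externally managed database endpoint as an environment property. The property name `DB_HOST` and the endpoint value are assumptions for illustration only.

```python
VALID_POLICIES = {
    "AllAtOnce",
    "Rolling",
    "RollingWithAdditionalBatch",
    "Immutable",
    "TrafficSplitting",
}


def deployment_settings(policy, db_endpoint):
    """Return Elastic Beanstalk option settings as a list of dictionaries."""
    if policy not in VALID_POLICIES:
        raise ValueError(f"unknown deployment policy: {policy}")
    return [
        {
            "Namespace": "aws:elasticbeanstalk:command",
            "OptionName": "DeploymentPolicy",
            "Value": policy,
        },
        {
            # Environment property pointing at a database that lives outside
            # the Beanstalk environment. DB_HOST is a hypothetical name.
            "Namespace": "aws:elasticbeanstalk:application:environment",
            "OptionName": "DB_HOST",
            "Value": db_endpoint,
        },
    ]


settings = deployment_settings("Immutable", "example-db.invalid:3306")
for setting in settings:
    print(setting["Namespace"], setting["OptionName"], "=", setting["Value"])
```

Keeping the database outside the environment matters for rollback: a database created as part of the environment shares its lifecycle, whereas an external instance survives environment replacement.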

Question 10

A company is implementing an Amazon ECS cluster to run its workload. The company architecture will run multiple ECS services on the cluster, with an Application Load Balancer on the front end, using multiple target groups to route traffic. The Application Development team has been struggling to collect logs that must be collected and sent to an Amazon S3 bucket for near-real-time analysis.

What must the DevOps Engineer configure in the deployment to meet these requirements? (Choose three.)

- Install the Amazon CloudWatch Logs logging agent on the ECS instances. Change the logging driver in the ECS task definition to 'awslogs'.

- Download the Amazon CloudWatch Logs container instance from AWS and configure it as a task. Update the application service definitions to include the logging task.

- Use Amazon CloudWatch Events to schedule an AWS Lambda function that will run every 60 seconds running the create-export-task CloudWatch Logs command, then point the output to the logging S3 bucket.

- Enable access logging on the Application Load Balancer, then point it directly to the S3 logging bucket.

- Enable access logging on the target groups that are used by the ECS services, then point it directly to the S3 logging bucket.

- Create an Amazon Kinesis Data Firehose with a destination of the S3 logging bucket, then create an Amazon CloudWatch Logs subscription filter for Kinesis.

Correct answer: ADF
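
The container-logging part of the keyed answer can be sketched as two pieces of configuration: an `awslogs` log configuration for the ECS task definition, and the parameters of a CloudWatch Logs subscription filter that forwards the log group to Kinesis Data Firehose. All names and ARNs below are placeholders; nothing is sent to AWS.

```python
def awslogs_configuration(log_group, region, stream_prefix):
    """Log configuration block for a container definition using awslogs."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": log_group,
            "awslogs-region": region,
            "awslogs-stream-prefix": stream_prefix,
        },
    }


def firehose_subscription(log_group, firehose_arn, role_arn):
    """Parameters shaped like a CloudWatch Logs PutSubscriptionFilter request."""
    return {
        "logGroupName": log_group,
        "filterName": "to-firehose",  # hypothetical filter name
        "filterPattern": "",          # empty pattern forwards every log event
        "destinationArn": firehose_arn,
        "roleArn": role_arn,
    }


# Placeholder identifiers for illustration only.
LOG_GROUP = "/ecs/example-service"
log_config = awslogs_configuration(LOG_GROUP, "us-east-1", "web")
subscription = firehose_subscription(
    LOG_GROUP,
    "arn:aws:firehose:us-east-1:111122223333:deliverystream/example-logs",
    "arn:aws:iam::111122223333:role/example-logs-to-firehose",
)

print(log_config)
print(subscription)
```

The log group named in the task definition and in the subscription filter must be the same one; the Firehose delivery stream then writes to the S3 bucket continuously, which is what distinguishes it from a scheduled export.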