File Info

| Field | Value |
| --- | --- |
| Exam | Administering Relational Databases on Microsoft Azure |
| Number | DP-300 |
| File Name | Microsoft.DP-300.Dump4Pass.2024-11-30.167q.tqb |
| Size | 11 MB |
| Posted | Nov 30, 2024 |


Demo Questions

Question 1

What should you use to migrate the PostgreSQL database?

- A. Azure Data Box

- B. AzCopy

- C. Azure Database Migration Service

- D. Azure Site Recovery

Correct answer: C

Explanation:

Reference:

https://docs.microsoft.com/en-us/azure/dms/dms-overview

Question 2

You are planning the migration of the SERVER1 databases. The solution must meet the business requirements.

What should you include in the migration plan? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Azure Database Migration Service

Box 1: Premium 4-vCore

Scenario: Migrate the SERVER1 databases to the Azure SQL Database platform.

Minimize downtime during the migration of the SERVER1 databases.

The Premium 4-vCore tier is for large or business-critical workloads. It supports online migrations, offline migrations, and faster migration speeds.

Incorrect Answers:

The Standard pricing tier suits most small- to medium-business workloads, but it supports offline migration only.

Box 2: A VPN gateway

You need to create a Microsoft Azure Virtual Network for the Azure Database Migration Service by using the Azure Resource Manager deployment model, which provides site-to-site connectivity to your on-premises source servers by using either ExpressRoute or VPN.

Reference:

https://azure.microsoft.com/pricing/details/database-migration/

https://docs.microsoft.com/en-us/azure/dms/tutorial-sql-server-azure-sql-online

Question 3

You need to recommend the appropriate purchasing model and deployment option for the 30 new databases. The solution must meet the technical requirements and the business requirements.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: DTU

Scenario:

The 30 new databases must scale automatically.

Once all requirements are met, minimize costs whenever possible.

You can configure resources for the pool based either on the DTU-based purchasing model or the vCore-based purchasing model.

In short, for simplicity, the DTU model has an advantage. Plus, if you’re just getting started with Azure SQL Database, the DTU model offers more options at the lower end of performance, so you can get started at a lower price point than with vCore.

Box 2: An Azure SQL Database elastic pool

Azure SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single server and share a set number of resources at a set price. Elastic pools in Azure SQL Database enable SaaS developers to optimize the price performance for a group of databases within a prescribed budget while delivering performance elasticity for each database.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-pool-overview

https://docs.microsoft.com/en-us/azure/azure-sql/database/reserved-capacity-overview

Question 4

You need to design a data retention solution for the Twitter feed data records. The solution must meet the customer sentiment analytics requirements.

Which Azure Storage functionality should you include in the solution?

- A. time-based retention

- B. change feed

- C. lifecycle management

- D. soft delete

Correct answer: C

Explanation:

The lifecycle management policy lets you:

- Delete blobs, blob versions, and blob snapshots at the end of their lifecycles.

Scenario:

- Purge Twitter feed data records that are older than two years.

- Store Twitter feeds in Azure Storage by using Event Hubs Capture. The feeds will be converted into Parquet files.

- Minimize administrative effort to maintain the Twitter feed data records.

Incorrect Answers:

A: Time-based retention policy support: Users can set policies to store data for a specified interval. When a time-based retention policy is set, blobs can be created and read, but not modified or deleted. After the retention period has expired, blobs can be deleted but not overwritten.
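As a rough illustration of the lifecycle management answer, the sketch below builds a policy document that deletes blobs once they are more than two years past their last modification. The rule name and the `twitterfeeds/` prefix are hypothetical placeholders, not values from the case study, and the two-year window is approximated as 730 days.

```python
import json

# Assumed values for illustration only; neither comes from the case study.
RETENTION_DAYS = 2 * 365          # "older than two years", approximated as 730 days
CAPTURE_PREFIX = "twitterfeeds/"  # hypothetical container/prefix holding the feed files


def build_purge_policy(days: int, prefix: str) -> dict:
    """Return a lifecycle policy that deletes block blobs under `prefix`
    once more than `days` days have passed since their last modification."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "purge-old-twitter-feeds",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],
                    },
                    "actions": {
                        "baseBlob": {
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


policy = build_purge_policy(RETENTION_DAYS, CAPTURE_PREFIX)
print(json.dumps(policy, indent=2))
```

Once a policy like this is attached to the storage account, the purge runs without any scheduled job to maintain, which is what the "minimize administrative effort" requirement asks for.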

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts

Question 5

You need to implement the surrogate key for the retail store table. The solution must meet the sales transaction dataset requirements. What should you create?

- A. a table that has a FOREIGN KEY constraint

- B. a table that has an IDENTITY property

- C. a user-defined SEQUENCE object

- D. a system-versioned temporal table

Correct answer: B

Explanation:

Scenario: Contoso requirements for the sales transaction dataset include:

Implement a surrogate key to account for changes to the retail store addresses.

A surrogate key on a table is a column with a unique identifier for each row. The key is not generated from the table data. Data modelers like to create surrogate keys on their tables when they design data warehouse models. You can use the IDENTITY property to achieve this goal simply and effectively without affecting load performance.
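The surrogate key idea can be tried locally with a small sketch. It uses SQLite's `AUTOINCREMENT` as a stand-in for the IDENTITY property, which is an assumption made purely so the example runs without an Azure environment. The table and column names (`dim_store`, `store_key`, `store_id`) are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# store_key is the surrogate key: generated by the engine, not derived from the data.
# store_id is the business key; it repeats when a store's address changes.
conn.execute(
    """
    CREATE TABLE dim_store (
        store_key INTEGER PRIMARY KEY AUTOINCREMENT,
        store_id  TEXT NOT NULL,
        address   TEXT NOT NULL
    )
    """
)

# The same store recorded twice, before and after an address change.
conn.execute(
    "INSERT INTO dim_store (store_id, address) VALUES (?, ?)",
    ("S001", "1 Main St"),
)
conn.execute(
    "INSERT INTO dim_store (store_id, address) VALUES (?, ?)",
    ("S001", "9 Oak Ave"),
)

rows = conn.execute(
    "SELECT store_key, store_id, address FROM dim_store ORDER BY store_key"
).fetchall()
for row in rows:
    print(row)
```

Each version of the store gets its own generated key, so fact rows can point at the address that was current at the time. Unlike this SQLite stand-in, IDENTITY values in a dedicated SQL pool are unique but are not guaranteed to be allocated in sequential order.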

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-identity

Question 6

You need to design an analytical storage solution for the transactional data. The solution must meet the sales transaction dataset requirements. What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Box 1: Hash

Scenario:

Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible. A hash distributed table can deliver the highest query performance for joins and aggregations on large tables.

Box 2: Round-robin

Scenario:

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific product. The product will be identified by a product ID. The table will be approximately 5 GB.

A round-robin table is the most straightforward table to create and delivers fast performance when used as a staging table for loads. These are some scenarios where you should choose round-robin distribution:

- When you cannot identify a single key to distribute your data.

- If your data doesn’t frequently join with data from other tables.

- When there are no obvious keys to join.

Incorrect Answers:

Replicated: Replicated tables eliminate the need to transfer data across compute nodes by replicating a full copy of the data of the specified table to each compute node. The best candidates for replicated tables are tables with sizes less than 2 GB compressed and small dimension tables.
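To make the difference between the two distribution choices concrete, here is a toy simulation. It assumes 60 distributions, as in a dedicated SQL pool, and uses `zlib.crc32` as a stand-in hash; the real hash function is internal to the service, so only the placement pattern is meaningful. The row data is made up.

```python
import zlib
from collections import Counter
from itertools import cycle

NUM_DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def hash_distribution(product_id: int) -> int:
    """Stand-in for hash distribution: the same key always maps to the same slot."""
    return zlib.crc32(str(product_id).encode()) % NUM_DISTRIBUTIONS


def round_robin_distributions(row_count: int) -> list:
    """Round-robin: rows are dealt out in turn, ignoring their content."""
    slots = cycle(range(NUM_DISTRIBUTIONS))
    return [next(slots) for _ in range(row_count)]


# Made-up fact rows: 100 products with 6 sales rows each (600 rows in total).
sales_rows = [pid for pid in range(1, 101) for _ in range(6)]

hash_placement = {}
for pid in sales_rows:
    hash_placement.setdefault(pid, set()).add(hash_distribution(pid))

rr_slots = round_robin_distributions(len(sales_rows))
rr_placement = {}
for pid, slot in zip(sales_rows, rr_slots):
    rr_placement.setdefault(pid, set()).add(slot)
rr_counts = Counter(rr_slots)

print("distributions per product (hash):", max(len(s) for s in hash_placement.values()))
print("distributions per product (round-robin):", max(len(s) for s in rr_placement.values()))
print("rows per distribution (round-robin):", set(rr_counts.values()))
```

With hash distribution every row for a given product ID lands in one distribution, so joins and filters on product ID avoid data movement. Round-robin spreads the same rows evenly but scatters each product across several distributions.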

Reference:

https://rajanieshkaushikk.com/2020/09/09/how-to-choose-right-data-distribution-strategy-for-azure-synapse/

Question 7

You have 20 Azure SQL databases provisioned by using the vCore purchasing model. You plan to create an Azure SQL Database elastic pool and add the 20 databases.

Which three metrics should you use to size the elastic pool to meet the demands of your workload? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. total size of all the databases

- B. geo-replication support

- C. number of concurrently peaking databases * peak CPU utilization per database

- D. maximum number of concurrent sessions for all the databases

- E. total number of databases * average CPU utilization per database

Correct answer: ACE

Explanation:

CE: Estimate the vCores needed for the pool as follows:

For the vCore-based purchasing model: MAX(<Total number of DBs X average vCore utilization per DB>, <Number of concurrently peaking DBs X peak vCore utilization per DB>)

A: Estimate the storage space needed for the pool by adding the number of bytes needed for all the databases in the pool.
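The sizing rules above reduce to a few lines of arithmetic. The sketch below is a minimal worked example; the workload numbers (average and peak vCore use, number of peaking databases, database sizes) are invented for illustration and are not part of the question.

```python
def estimate_pool_vcores(total_dbs, avg_vcores_per_db, peaking_dbs, peak_vcores_per_db):
    """vCores for the pool: the larger of the average-load estimate
    and the concurrent-peak estimate."""
    return max(total_dbs * avg_vcores_per_db, peaking_dbs * peak_vcores_per_db)


def estimate_pool_storage_gb(db_sizes_gb):
    """Storage for the pool: the sum of the space needed by every database."""
    return sum(db_sizes_gb)


# Hypothetical workload: 20 databases averaging 0.5 vCore each,
# with at most 4 peaking at the same time at 3 vCores each.
vcores = estimate_pool_vcores(
    total_dbs=20, avg_vcores_per_db=0.5, peaking_dbs=4, peak_vcores_per_db=3.0
)
storage_gb = estimate_pool_storage_gb([25] * 20)  # 20 databases of 25 GB each

print(f"Estimated vCores: {vcores}")
print(f"Estimated storage: {storage_gb} GB")
```

Here the concurrent-peak term (4 x 3.0 = 12) exceeds the average term (20 x 0.5 = 10), so the pool needs at least 12 vCores. This is why all three metrics in the answer (A, C, and E) feed into the sizing.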

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-pool-overview

Question 8

You have SQL Server 2019 on an Azure virtual machine that contains an SSISDB database. A recent failure causes the master database to be lost.

You discover that all Microsoft SQL Server Integration Services (SSIS) packages fail to run on the virtual machine.

Which four actions should you perform in sequence to resolve the issue? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Correct answer: To work with this question, an Exam Simulator is required.

Explanation:

Step 1: Attach the SSISDB database

Step 2: Turn on the TRUSTWORTHY property and the CLR property

If you are restoring the SSISDB database to a SQL Server instance where the SSISDB catalog was never created, enable common language runtime (CLR).

Step 3: Open the master key for the SSISDB database

Restore the master key by this method if you have the original password that was used to create SSISDB.

OPEN MASTER KEY DECRYPTION BY PASSWORD = 'LS1Setup!'; -- password used when creating SSISDB

ALTER MASTER KEY ADD ENCRYPTION BY SERVICE MASTER KEY;

Step 4: Encrypt a copy of the master key by using the service master key

Reference:

https://docs.microsoft.com/en-us/sql/integration-services/backup-restore-and-move-the-ssis-catalog

Question 9

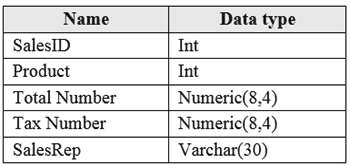

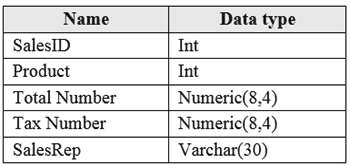

You have an Azure SQL database that contains a table named FactSales. FactSales contains the columns shown in the following table.

FactSales has 6 billion rows and is loaded nightly by using a batch process. You must provide the greatest reduction in space for the database and maximize performance.

Which type of compression provides the greatest space reduction for the database?

- A. page compression

- B. row compression

- C. columnstore compression

- D. columnstore archival compression

Correct answer: D

Explanation:

Columnstore tables and indexes are always stored with columnstore compression. You can further reduce the size of columnstore data by configuring an additional compression called archival compression.

Note: Columnstore: The columnstore index is also logically organized as a table with rows and columns, but the data is physically stored in a column-wise data format.

Incorrect Answers:

B: Rowstore: The rowstore index is the traditional style that has been around since the initial release of SQL Server. For rowstore tables and indexes, use the data compression feature to help reduce the size of the database.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/data-compression/data-compression

Question 10

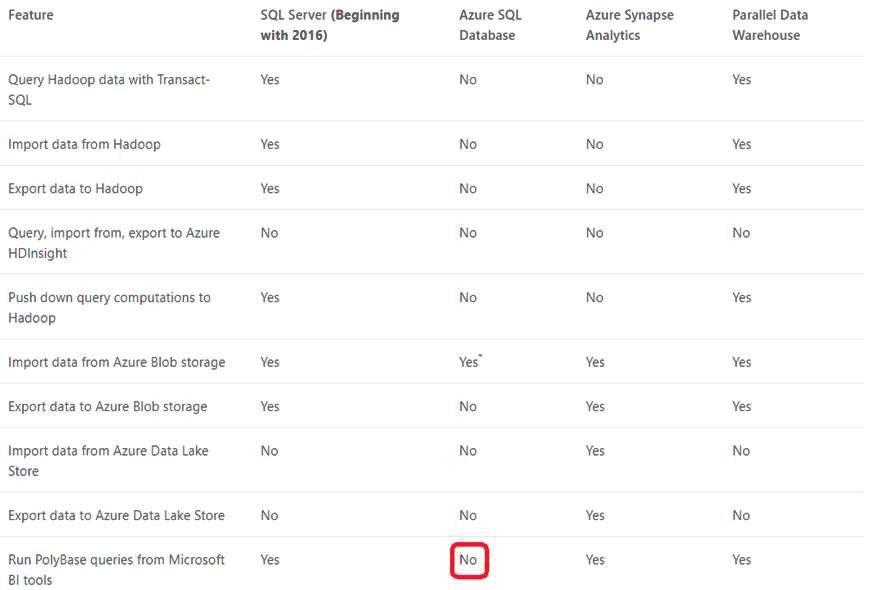

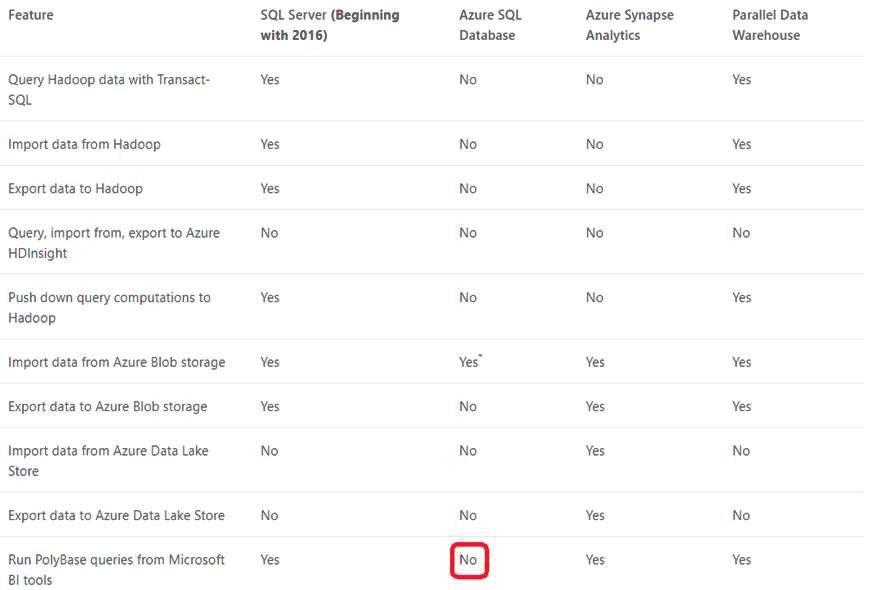

You have a Microsoft SQL Server 2019 database named DB1 that uses the following database-level and instance-level features.

- Clustered columnstore indexes

- Automatic tuning

- Change tracking

- PolyBase

You plan to migrate DB1 to an Azure SQL database.

Which feature should be removed or replaced before DB1 can be migrated?

- A. Clustered columnstore indexes

- B. PolyBase

- C. Change tracking

- D. Automatic tuning

Correct answer: B

Explanation:

This table lists the key features for PolyBase and the products in which they're available.

Incorrect Answers:

C: Change tracking is a lightweight solution that provides an efficient change tracking mechanism for applications. It applies to both Azure SQL Database and SQL Server.

D: Azure SQL Database and Azure SQL Managed Instance automatic tuning provides peak performance and stable workloads through continuous performance tuning based on AI and machine learning.

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/polybase/polybase-versioned-feature-summary