File Info

| Exam | MuleSoft Certified Integration Architect-Level 1 MAINTENANCE |
|---|---|
| Number | MCIA-LEVEL-1-MAINTENANCE |
| File Name | Mulesoft.MCIA-LEVEL-1-MAINTENANCE.VCEplus.2024-11-09.116q.tqb |
| Size | 3 MB |
| Posted | Nov 09, 2024 |



Demo Questions

Question 1

An organization is designing a Mule application to support an all-or-nothing transaction between several database operations and some other connectors, so that they all roll back if there is a problem with any of the connectors. Besides the Database connector, what other connector can be used in the transaction?

- VM

- Anypoint MQ

- SFTP

- ObjectStore

Correct answer: A

Explanation:

The correct answer is VM. The VM connector supports transactions: when an exception occurs, the transaction rolls back to its original state so the message can be reprocessed. None of the other listed connectors (Anypoint MQ, SFTP, Object Store) support this.

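The all-or-nothing behavior can be sketched outside Mule. The Python below is a minimal illustration under stated assumptions, not Mule's transaction API: an in-memory SQLite database stands in for the Database connector, and a hypothetical `TransactionalQueue` class stands in for a transactional VM queue that only makes messages visible on commit. A real XA transaction manager uses two-phase commit so that the database and the queue cannot end up out of sync; this toy version simply commits one after the other.

```python
import sqlite3


class TransactionalQueue:
    """Hypothetical stand-in for a transactional VM queue (not a Mule API).

    Messages published inside a transaction are staged and only become
    visible to consumers on commit; rollback discards them.
    """

    def __init__(self):
        self.messages = []   # committed, visible messages
        self._pending = []   # messages staged by the open transaction

    def publish(self, message):
        self._pending.append(message)

    def commit(self):
        self.messages.extend(self._pending)
        self._pending.clear()

    def rollback(self):
        self._pending.clear()


def run_all_or_nothing(conn, queue, records, fail_after=None):
    """Insert records and publish one message per record as one unit of work.

    If any step raises, both the database and the queue roll back.
    `fail_after` (hypothetical) simulates a connector failing at record N.
    Returns True on commit, False on rollback.
    """
    try:
        for i, record in enumerate(records):
            if fail_after is not None and i == fail_after:
                raise RuntimeError("simulated connector failure")
            conn.execute("INSERT INTO orders (id, item) VALUES (?, ?)", record)
            queue.publish({"order_id": record[0]})
        # Toy sequential commit; XA would use two-phase commit here.
        conn.commit()
        queue.commit()
        return True
    except Exception:
        conn.rollback()
        queue.rollback()
        return False
```

Calling `run_all_or_nothing` with `fail_after=1` leaves both the `orders` table and `queue.messages` empty, because the first record's insert and message are rolled back together.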

Question 2

A Mule application uses an HTTP Request operation to invoke an external API.

The external API follows the HTTP specification for proper status code usage.

What is a possible cause when a 3xx status code is returned to the HTTP Request operation from the external API?

- The request was not accepted by the external API

- The request was Redirected to a different URL by the external API

- The request was NOT RECEIVED by the external API

- The request was ACCEPTED by the external API

Correct answer: B

Explanation:

3xx HTTP status codes indicate a redirection: the user agent (a web browser or a crawler) needs to take further action to access the requested resource.

Reference: https://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html
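A client that receives a 3xx response typically reads the `Location` header and issues a new request to that URL. The sketch below, in Python with only the standard library, classifies a status code by its first digit and resolves a possibly relative `Location` header against the original request URL. The set of redirect codes it follows is an assumption for illustration: 304 Not Modified is also a 3xx code, but it tells the client to reuse a cached copy rather than go elsewhere.

```python
from urllib.parse import urljoin

# Redirect codes this sketch follows via the Location header (assumption).
REDIRECT_CODES = {301, 302, 303, 307, 308}


def status_class(code):
    """Map an HTTP status code to its class, based on its first digit."""
    classes = {1: "informational", 2: "success", 3: "redirection",
               4: "client error", 5: "server error"}
    if not 100 <= code <= 599:
        raise ValueError(f"invalid HTTP status code: {code}")
    return classes[code // 100]


def redirect_target(request_url, code, headers):
    """Return the absolute URL to follow, or None if this is not a redirect.

    The Location header may be relative, so it is resolved against the
    URL of the original request.
    """
    if code in REDIRECT_CODES and "Location" in headers:
        return urljoin(request_url, headers["Location"])
    return None
```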

Question 3

An organization is migrating all its Mule applications to Runtime Fabric (RTF). None of the Mule applications use Mule domain projects.

Currently, all the Mule applications have been manually deployed to a server group among several customer-hosted Mule runtimes.

Port conflicts between these Mule application deployments are currently managed by the DevOps team who carefully manage Mule application properties files.

When the Mule applications are migrated from the current customer-hosted server group to Runtime Fabric (RTF), do the Mule applications need to be rewritten, and what DevOps port configuration responsibilities change or stay the same?

- Yes, the Mule applications MUST be rewritten; DevOps NO LONGER needs to manage port conflicts between the Mule applications

- Yes, the Mule applications MUST be rewritten; DevOps MUST STILL manage port conflicts

- NO, the Mule applications do NOT need to be rewritten; DevOps MUST STILL manage port conflicts

- NO, the Mule applications do NOT need to be rewritten; DevOps NO LONGER needs to manage port conflicts between the Mule applications

Correct answer: D

Explanation:

- Anypoint Runtime Fabric is a container service that automates the deployment and orchestration of your Mule applications and gateways.

- Runtime Fabric runs on customer-managed infrastructure on AWS, Azure, virtual machines (VMs), or bare-metal servers.

- None of the Mule applications use Mule domain projects, so the applications do not need to be rewritten.

- On RTF, each Mule application is deployed to its own isolated container, so every application can listen on the same default port (8081), and inbound traffic reaches it through ingress.

- Hence the DevOps team no longer needs to manage port conflicts between the Mule applications.
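The difference in port management can be modeled with a small Python sketch. The inventory format `(app_name, host, port)` and the `isolated` flag are hypothetical. With `isolated=False`, apps on the same host share one network namespace, as on a customer-hosted runtime, so two apps binding the same port conflict. With `isolated=True`, each app is its own network boundary, as with one container per app on RTF.

```python
from collections import defaultdict


def find_port_conflicts(deployments, isolated=False):
    """Return {(network, port): [apps]} for every port bound by more than one app.

    deployments: list of (app_name, host, port) tuples (hypothetical inventory).
    isolated=False: apps on a host share its network namespace (shared runtime).
    isolated=True: each app runs in its own container, so the app itself is
    the network boundary and the host no longer matters.
    """
    bound = defaultdict(list)
    for app, host, port in deployments:
        network = app if isolated else host
        bound[(network, port)].append(app)
    return {key: apps for key, apps in bound.items() if len(apps) > 1}
```

For example, two apps both configured for port 8081 on `server-1` are reported as a conflict in the shared-runtime model, while the same inventory reports nothing when each app is isolated.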

Question 4

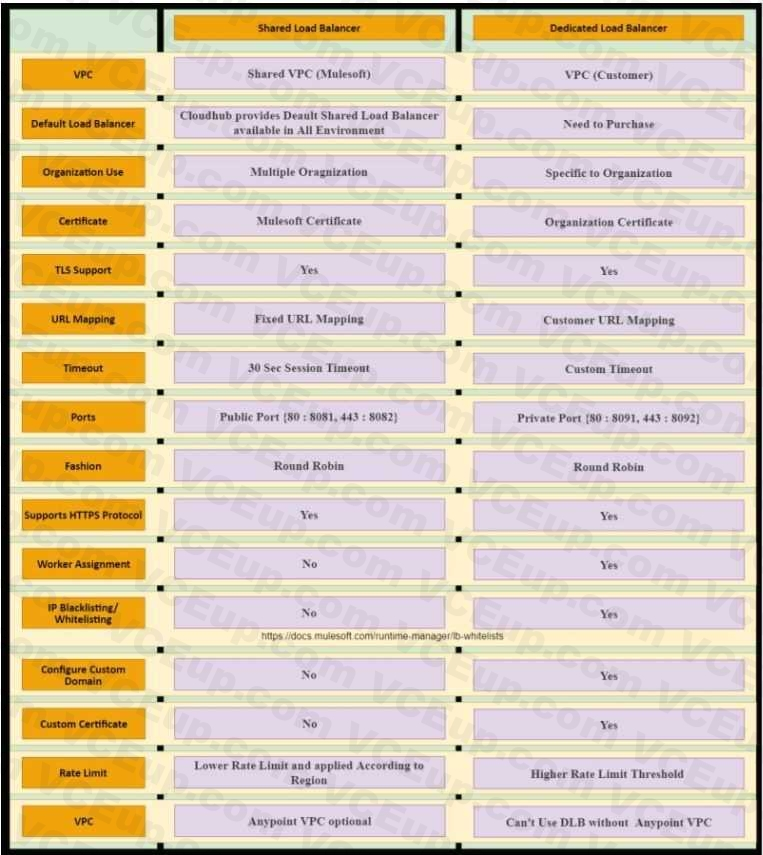

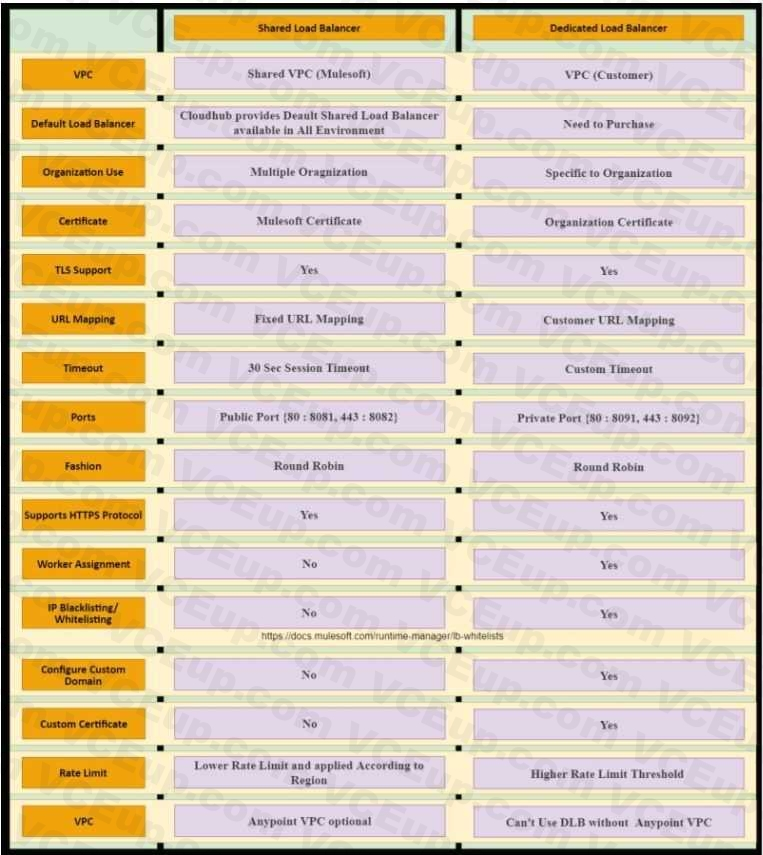

An organization is evaluating using the CloudHub shared load balancer (SLB) vs creating a CloudHub dedicated load balancer (DLB). They are evaluating how this choice affects the various types of certificates used by CloudHub-deployed Mule applications, including MuleSoft-provided, customer-provided, or Mule application-provided certificates.

What type of restrictions exist on the types of certificates that can be exposed by the CloudHub Shared Load Balancer (SLB) to external web clients over the public internet?

- Only MuleSoft-provided certificates are exposed.

- Only customer-provided wildcard certificates are exposed.

- Only customer-provided self-signed certificates are exposed.

- Only underlying Mule application certificates are exposed (pass-through)

Correct answer: A

Explanation:

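The CloudHub shared load balancer exposes only MuleSoft-provided certificates; to expose a customer-provided certificate, the organization must create a dedicated load balancer.

Reference: https://docs.mulesoft.com/runtime-manager/dedicated-load-balancer-tutorial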

Question 5

A Mule application is being designed to receive a nightly CSV file containing millions of records from an external vendor over SFTP. The records from the file need to be validated, transformed, and then written to a database.

Records can be inserted into the database in any order.

In this use case, what combination of Mule components provides the most effective and performant way to write these records to the database?

- Use a Parallel For Each scope to insert records one by one into the database

- Use a Scatter-Gather to bulk insert records into the database

- Use a Batch job scope to bulk insert records into the database.

- Use a DataWeave map operation and an Async scope to insert records one by one into the database.

Correct answer: C

Explanation:

The correct answer is to use a Batch Job scope to bulk insert records into the database. A Batch Job is the most efficient way to process millions of records.

A few points to note:

Reliability: If processing must survive a runtime crash or other failure and, after a restart, continue with the remaining records, use a Batch Job, because it stores records in persistent queues.

Error handling: In Parallel For Each, an error in a particular route stops processing of the remaining records in that route unless you handle it with On Error Continue. A Batch Job does not stop on such errors; instead, you can add a batch step that processes only failed records and handles them there.

Memory footprint: With millions of records to process, Parallel For Each aggregates all the processed records at the end, which can cause an OutOfMemoryError.

In contrast, a Batch Job provides a BatchJobResult in the On Complete phase, from which you can get the counts of successful and failed records. For large file processing where order does not matter, a Batch Job is the better choice.
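The reasoning above can be sketched in Python with the standard library, as an analogy to a Batch Job with a Batch Aggregator rather than Mule's actual API. Invalid records are counted as failures instead of stopping the job, valid records are transformed and written in blocks with one `executemany()` call per block, and a summary dictionary plays the role of the batch job result. The table schema, the validation rules, and the `block_size` parameter are illustrative assumptions.

```python
import csv
import io
import sqlite3


def load_in_blocks(csv_text, conn, block_size=100):
    """Validate, transform, and bulk insert CSV records in fixed-size blocks.

    Returns a summary dict, a hypothetical stand-in for a batch job result.
    """
    summary = {"total": 0, "successful": 0, "failed": 0}
    block = []

    def flush():
        if block:
            with conn:  # commits the block, or rolls it back and re-raises on error
                conn.executemany(
                    "INSERT INTO customers (id, email) VALUES (?, ?)", block)
            summary["successful"] += len(block)
            block.clear()

    for row in csv.DictReader(io.StringIO(csv_text)):
        summary["total"] += 1
        # Validation step: reject records with a non-numeric id or no "@" in the email.
        if not row["id"].isdigit() or "@" not in row["email"]:
            summary["failed"] += 1
            continue
        # Transformation step: convert the id and normalize the email.
        block.append((int(row["id"]), row["email"].strip().lower()))
        if len(block) == block_size:
            flush()
    flush()  # write the final, possibly partial, block
    return summary
```

Writing per block rather than per record is the design choice that matters here: the number of database round trips drops by roughly a factor of `block_size`.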

Question 6

An automation engineer needs to write scripts to automate the steps of the API lifecycle, including steps to create, publish, deploy and manage APIs and their implementations in Anypoint Platform.

What Anypoint Platform feature can be used to automate the execution of all these actions in scripts in the easiest way without needing to directly invoke the Anypoint Platform REST APIs?

- Automated Policies in API Manager

- Runtime Manager agent

- The Mule Maven Plugin

- Anypoint CLI

Correct answer: D

Explanation:

Anypoint Platform provides a scripting and command-line tool for both Anypoint Platform and Anypoint Platform Private Cloud Edition (Anypoint Platform PCE). The command-line interface (CLI) supports both the interactive shell and standard CLI modes and works with Anypoint Exchange, Access Management, and Anypoint Runtime Manager.

Question 7

A company wants its users to log in to Anypoint Platform using the company's own internal user credentials. To achieve this, the company needs to integrate an external identity provider (IdP) with the company's Anypoint Platform master organization, but SAML 2.0 CANNOT be used. Besides SAML 2.0, what single-sign-on standard can the company use to integrate the IdP with their Anypoint Platform master organization?

- SAML 1.0

- OAuth 2.0

- Basic Authentication

- OpenID Connect

Correct answer: D

Explanation:

As the Anypoint Platform organization administrator, you can configure identity management in Anypoint Platform to set up users for single sign-on (SSO).

Configure identity management using one of the following single sign-on standards:

1) OpenID Connect: End user identity verification by an authorization server including SSO

2) SAML 2.0: Web-based authorization including cross-domain SSO
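When integrating an OpenID Connect IdP, the IdP's endpoints can usually be read from its discovery document, which the OpenID Connect Discovery specification places at `/.well-known/openid-configuration` under the issuer URL. The Python sketch below builds that URL and extracts the issuer, authorization, token, and user info endpoints, which are the kind of values an OIDC identity provider configuration asks for. The exact fields Anypoint Platform requires are an assumption here, and the issuer `https://idp.example.com` is hypothetical.

```python
import json

DISCOVERY_PATH = "/.well-known/openid-configuration"


def discovery_url(issuer):
    """Build the OpenID Connect Discovery URL for an issuer."""
    return issuer.rstrip("/") + DISCOVERY_PATH


def endpoints_for_sso(discovery_json):
    """Extract the endpoints this sketch assumes an SSO setup needs."""
    doc = json.loads(discovery_json)
    needed = ["issuer", "authorization_endpoint", "token_endpoint", "userinfo_endpoint"]
    missing = [key for key in needed if key not in doc]
    if missing:
        raise ValueError(f"discovery document missing: {missing}")
    return {key: doc[key] for key in needed}
```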

Question 8

An API implementation is being developed to expose data from a production database via HTTP requests. The API implementation executes a database SELECT statement that is dynamically created based upon data received from each incoming HTTP request. The developers are planning to use various types of testing to make sure the Mule application works as expected, can handle specific workloads, and behaves correctly from an API consumer perspective. What type of testing would typically mock the results from each SELECT statement rather than actually execute it in the production database?

- Unit testing (white box)

- Integration testing

- Functional testing (black box)

- Performance testing

Correct answer: A

Explanation:


In Unit testing instead of using actual backends, stubs are used for the backend services. This ensures that developers are not blocked and have no dependency on other systems.

Below are the typical characteristics of unit testing.

-- Unit tests do not require deployment into any special environment, such as a staging environment

-- Unit tests can be run from within an embedded Mule runtime

-- Unit tests can/should be implemented using MUnit

-- For read-only interactions to any dependencies (such as other APIs): allowed to invoke production endpoints

-- For write interactions: developers must implement mocks using MUnit

-- Unit tests require knowledge of the implementation details of the API implementation under test
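In a Mule project this mocking is done with MUnit. The same white-box idea can be shown in Python with `unittest.mock`. The function under test is a hypothetical stand-in for the API logic, and the test stubs the database's `execute` call so that the SELECT never reaches a real database, then checks both the mapped result and the exact parameterized query the logic issued.

```python
from unittest.mock import Mock


def find_customers_by_city(db, city):
    """Hypothetical API logic under test: builds a parameterized SELECT from request data."""
    rows = db.execute("SELECT id, name FROM customers WHERE city = ?", (city,))
    return [{"id": row[0], "name": row[1]} for row in rows]


def test_find_customers_by_city_uses_mocked_select():
    db = Mock()
    # Stub the SELECT result instead of querying the production database.
    db.execute.return_value = [(1, "Ada"), (2, "Grace")]

    result = find_customers_by_city(db, "London")

    assert result == [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]
    # White-box check: the implementation issued exactly this query.
    db.execute.assert_called_once_with(
        "SELECT id, name FROM customers WHERE city = ?", ("London",))
```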

Question 9

A travel company wants to publish a well-defined booking service API to be shared with its business partners. These business partners have agreed to ONLY consume SOAP services and they want to get the service contracts in an easily consumable way before they start any development. The travel company will publish the initial design documents to Anypoint Exchange, then share those documents with the business partners. When using an API-led approach, what is the first design document the travel company should deliver to its business partners?

- Create a WSDL specification using any XML editor

- Create a RAML API specification using any text editor

- Create an OAS API specification in Design Center

- Create a SOAP API specification in Design Center

Correct answer: A

Explanation:

SOAP API specifications are provided as WSDL. Design Center doesn't provide the functionality to create a WSDL file, so the WSDL needs to be created using an XML editor.
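A WSDL is a machine-readable contract that SOAP tooling can consume directly. As an illustration, the Python sketch below parses a hypothetical, heavily trimmed WSDL 1.1 document for a booking service (the service, port type, and operation names are invented) with `xml.etree.ElementTree` and lists the operations a partner would see.

```python
import xml.etree.ElementTree as ET

WSDL_NS = {"wsdl": "http://schemas.xmlsoap.org/wsdl/"}

# Hypothetical, heavily trimmed WSDL 1.1 contract for a booking service
# (types, messages, binding, and service elements omitted for brevity).
BOOKING_WSDL = """<?xml version="1.0"?>
<wsdl:definitions xmlns:wsdl="http://schemas.xmlsoap.org/wsdl/"
                  name="BookingService"
                  targetNamespace="http://example.com/booking">
  <wsdl:portType name="BookingPortType">
    <wsdl:operation name="CreateBooking"/>
    <wsdl:operation name="CancelBooking"/>
  </wsdl:portType>
</wsdl:definitions>"""


def list_operations(wsdl_text):
    """Return {portType name: [operation names]} from a WSDL 1.1 document."""
    root = ET.fromstring(wsdl_text)
    return {
        port_type.get("name"): [
            op.get("name") for op in port_type.findall("wsdl:operation", WSDL_NS)
        ]
        for port_type in root.findall("wsdl:portType", WSDL_NS)
    }
```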

Question 10

What is NOT true about Mule domain projects?

- This allows Mule applications to share resources

- Expose multiple services within the Mule domain on the same port

- Only available on Anypoint Runtime Fabric

- Send events (messages) to other Mule applications using VM queues

Correct answer: C

Explanation:

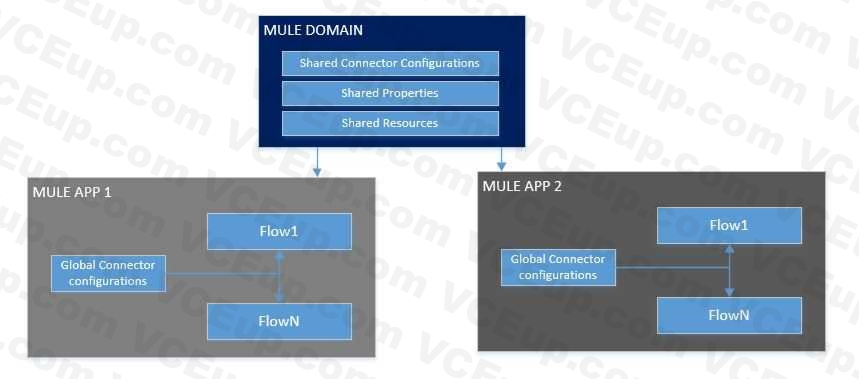

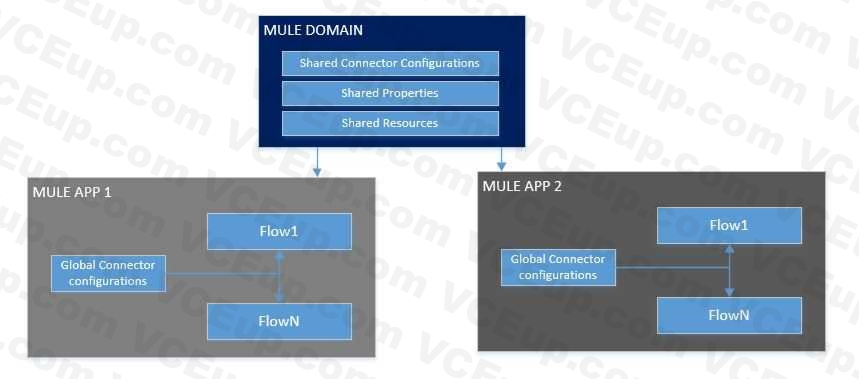

* A Mule domain project is ONLY available for customer-hosted Mule runtimes, not for Anypoint Runtime Fabric.

* Mule domain projects are available for Hybrid and Private Cloud (PCE) deployments. The other deployment options provide application isolation and cannot support domain projects.

What is a Mule domain project?

* A Mule domain project is used to configure resources that are shared among different projects. These resources can be used by all the projects associated with the domain. A Mule application can be associated with only one domain, but a domain can be associated with multiple projects. Shared resources allow multiple development teams to work in parallel using the same set of reusable connectors. Defining these connectors as shared resources at the domain level allows the team to:
  - Expose multiple services within the domain through the same port.
  - Share the connection to persistent storage.
  - Share services between apps through a well-defined interface.
  - Ensure consistency between apps upon any changes, because the configuration is set in only one place.

* Use a domain project to share the same host and port among multiple projects. You can declare the HTTP connector configuration within a domain project and associate the domain project with other projects. This also lets you control thread settings, keystore configurations, and timeouts for all the requests made within multiple applications. You could achieve something similar by duplicating the HTTP connector configuration across all the applications, but then any change would require editing and redeploying every application.

* If you put the connector configuration in the domain and let all the applications use the new domain instead of the default domain, you maintain only one copy of the HTTP connector configuration. Any change then requires only the domain to be redeployed instead of all the applications.
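The shared-port idea can be illustrated with a conceptual Python model. `SharedListener` is hypothetical and not a Mule API: it stands for one HTTP listener configuration declared in a domain, to which several applications attach on the same host and port, with requests dispatched by path.

```python
class SharedListener:
    """Conceptual model of one HTTP listener config shared through a domain.

    Hypothetical class, not a Mule API: several applications attach to the
    same host and port, and requests are dispatched by path.
    """

    def __init__(self, host, port):
        self.host = host
        self.port = port
        self._routes = {}  # path -> owning application

    def register(self, app, path):
        # Two different apps cannot own the same path on the shared port.
        owner = self._routes.get(path)
        if owner is not None and owner != app:
            raise ValueError(f"{path} on port {self.port} already used by {owner}")
        self._routes[path] = app

    def dispatch(self, path):
        """Return the application that handles a request path, or None."""
        return self._routes.get(path)
```

With this model, `orders-app` and `billing-app` can both listen on port 8081 as long as they register different paths; only a duplicate path is rejected.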

You can start using domains in only three steps:

1) Create a Mule Domain project

2) Create the global connector configurations that need to be shared across the applications inside the Mule domain project

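3) Reference the domain from each application: in Mule 3, set the value of domain in the application's mule-deploy.properties file; in Mule 4, add the domain as a dependency with the mule-domain classifier in the application's pom.xml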