File Info

| Field | Value |
| --- | --- |
| Exam | Upgrade to Oracle Database 12c |
| Number | 1z0-060 |
| File Name | Oracle.1z0-060.RealExams.2018-10-04.114q.vcex |
| Size | 1 MB |
| Posted | Oct 04, 2018 |

Demo Questions

Question 1

Your database is running in ARCHIVELOG mode.

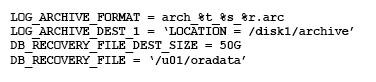

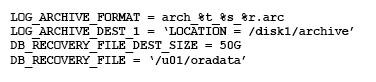

The following parameters are set in your database instance:

Which statement is true about the archived redo log files?

- They are created only in the location specified by the LOG_ARCHIVE_DEST_1 parameter.

- They are created only in the Fast Recovery Area because configuring the DB_RECOVERY_FILE_DEST and DB_RECOVERY_FILE_DEST_SIZE parameters automatically enables flashback for the database.

- They are created in the location specified by the LOG_ARCHIVE_DEST_1 parameter and in the default location $ORACLE_HOME/dbs/arch.

- They are created in the location specified by the LOG_ARCHIVE_DEST_1 parameter and in the location specified by the DB_RECOVERY_FILE_DEST parameter.

Correct answer: A

Explanation:

You can choose to archive redo logs to a single destination or to multiple destinations. Destinations can be local (within the local file system or an Oracle Automatic Storage Management (Oracle ASM) disk group) or remote (on a standby database). When you archive to multiple destinations, a copy of each filled redo log file is written to each destination. These redundant copies help ensure that archived logs are always available in the event of a failure at one of the destinations.

To archive to only a single destination, specify that destination using the LOG_ARCHIVE_DEST initialization parameter.

To archive to multiple destinations, you can choose to archive to two or more locations using the LOG_ARCHIVE_DEST_n initialization parameters, or to archive only to a primary and secondary destination using the LOG_ARCHIVE_DEST and LOG_ARCHIVE_DUPLEX_DEST initialization parameters.
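As an illustration of the LOG_ARCHIVE_DEST_n approach described above, here is a minimal sketch. The directory paths `/u01/arch1` and `/u02/arch2` are hypothetical, and `SCOPE=BOTH` assumes the instance was started with a server parameter file.

```sql
-- Hypothetical paths: replace with directories that exist on your server.
-- Archive every filled redo log to two local destinations.
ALTER SYSTEM SET LOG_ARCHIVE_DEST_1 = 'LOCATION=/u01/arch1' SCOPE=BOTH;
ALTER SYSTEM SET LOG_ARCHIVE_DEST_2 = 'LOCATION=/u02/arch2' SCOPE=BOTH;

-- Check which destinations are configured and whether they are usable.
SELECT dest_name, status, destination
FROM   v$archive_dest
WHERE  destination IS NOT NULL;
```

Note that LOG_ARCHIVE_DEST and the LOG_ARCHIVE_DEST_n parameters cannot be used together, so a configuration uses one style or the other.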

Question 2

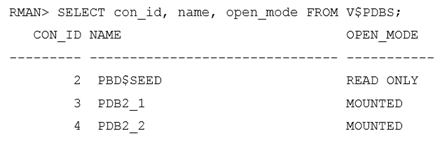

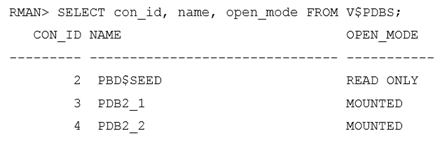

Your multitenant container database (CDB) is running in ARCHIVELOG mode. You connect to the CDB using RMAN.

Examine the following command and its output:

You execute the following command:

RMAN > BACKUP DATABASE PLUS ARCHIVELOG;

Which data files will be backed up?

- Data files that belong to only the root container

- Data files that belong to the root container and all the pluggable databases (PDBs)

- Data files that belong to only the root container and PDB$SEED

- Data files that belong to the root container and all the PDBs excluding PDB$SEED

Correct answer: B

Explanation:

Backing Up a Whole CDB

Backing up a whole CDB is similar to backing up a non-CDB. When you back up a whole CDB, RMAN backs up the root, all the PDBs, and the archived redo logs. You can then recover either the whole CDB, the root only, or one or more PDBs from the CDB backup.

Note:

- You can back up and recover a whole CDB, the root only, or one or more PDBs.

Backing Up Archived Redo Logs with RMAN

Archived redo logs are the key to successful media recovery. Back them up regularly. You can back up logs with BACKUP ARCHIVELOG, or back up logs while backing up datafiles and control files by specifying BACKUP ... PLUS ARCHIVELOG.

Question 3

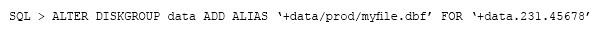

You are administering a database stored in Automatic Storage Management (ASM). The files are stored in the DATA disk group. You execute the following command:

What is the result?

- The file ‘+data.231.45678’ is physically relocated to ‘+data/prod’ and renamed as ‘myfile.dbf’.

- The file ‘+data.231.45678’ is renamed as ‘myfile.dbf’, and copied to ‘+data/prod’.

- The file ‘+data.231.45678’ remains in the same location and a synonym ‘myfile.dbf’ is created.

- The file ‘myfile.dbf’ is created in ‘+data/prod’ and the reference to ‘+data.231.45678’ in the data dictionary is removed.

Correct answer: C

Explanation:

ADD ALIAS

Use this clause to create an alias name for an Oracle ASM filename. The alias_name consists of the full directory path and the alias itself. Creating an alias does not move or copy the file: the system-generated file name stays valid and the alias is simply a second name for the same file.
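The command in the question is shown only as an image, so the following is an illustrative sketch of an ADD ALIAS statement that reuses the names from the answer options. It assumes a connection to the ASM instance and that the `+data/prod` directory already exists.

```sql
-- Illustrative only: names are taken from the answer options above.
-- The file itself is untouched; only a new alias entry is created.
ALTER DISKGROUP data
  ADD ALIAS '+data/prod/myfile.dbf' FOR '+data.231.45678';

-- List alias entries; SYSTEM_CREATED = 'N' marks user-created aliases.
SELECT name, alias_directory, system_created
FROM   v$asm_alias;
```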

Question 4

Which three functions are performed by the SQL Tuning Advisor?

- Building and implementing SQL profiles

- Recommending the optimization of materialized views

- Checking query objects for missing and stale statistics

- Recommending bitmap, function-based, and B-tree indexes

- Recommending the restructuring of SQL queries that are using bad plans

Correct answer: ACE

Explanation:

The SQL Tuning Advisor takes one or more SQL statements as input and invokes the Automatic Tuning Optimizer to perform SQL tuning on the statements. The output of the SQL Tuning Advisor takes the form of advice or recommendations, along with a rationale for each recommendation and its expected benefit. A recommendation relates to the collection of statistics on objects (C), the creation of new indexes, the restructuring of the SQL statement (E), or the creation of a SQL profile (A). You can choose to accept a recommendation to complete the tuning of the SQL statements.

Question 5

Examine the following command:

ALTER SYSTEM SET enable_ddl_logging=FALSE;

Which statement is true?

- None of the data definition language (DDL) statements are logged in the trace file.

- Only DDL commands that resulted in errors are logged in the alert log file.

- A new log.xml file that contains the DDL statements is created, and the DDL command details are removed from the alert log file.

- Only DDL commands that resulted in the creation of new database files are logged.

Correct answer: A

Explanation:

ENABLE_DDL_LOGGING enables or disables the writing of a subset of data definition language (DDL) statements to a DDL alert log.

The DDL log is a file that has the same format and basic behavior as the alert log, but it only contains the DDL statements issued by the database. The DDL log is created only for the RDBMS component and only if the ENABLE_DDL_LOGGING initialization parameter is set to true. When this parameter is set to false, DDL statements are not included in any log.
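The effect of the parameter can be sketched as follows. The table name `ddl_log_demo` is hypothetical and exists only for the demonstration.

```sql
-- With the parameter set to TRUE, supported DDL statements are written to the DDL log.
ALTER SYSTEM SET enable_ddl_logging = TRUE;
CREATE TABLE ddl_log_demo (id NUMBER);   -- hypothetical table; this DDL is logged

-- With the parameter set to FALSE, DDL statements are not included in any log.
ALTER SYSTEM SET enable_ddl_logging = FALSE;
DROP TABLE ddl_log_demo;                 -- this DDL is not logged
```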

Question 6

Your multitenant container database (CDB) contains three pluggable databases (PDBs). You find that the control file is damaged. You plan to use RMAN to recover the control file. There are no startup triggers associated with the PDBs.

Which three steps should you perform to recover the control file and make the database fully operational? (Choose three.)

- Mount the container database (CDB) and restore the control file from the control file autobackup.

- Recover and open the CDB in NORMAL mode.

- Mount the CDB and then recover and open the database, with the RESETLOGS option.

- Open all the pluggable databases.

- Recover each pluggable database.

- Start the database instance in the nomount stage and restore the control file from control file autobackup.

Correct answer: CDF

Explanation:

Step 1: Start the database instance in the nomount stage and restore the control file from the control file autobackup (F).

Step 2: Mount the CDB, then recover and open it with the RESETLOGS option (C). If all copies of the current control file are lost or damaged, then you must restore and mount a backup control file. You must then run the RECOVER command, even if no data files have been restored, and open the database with the RESETLOGS option.

Step 3: Open all the pluggable databases (D). Because no startup triggers are associated with the PDBs, they are not opened automatically when the CDB opens.

Note:

- RMAN and Oracle Enterprise Manager Cloud Control (Cloud Control) provide full support for backup and recovery in a multitenant environment. You can back up and recover a whole multitenant container database (CDB), root only, or one or more pluggable databases (PDBs).

Question 7

A new report process containing a complex query is written, with high impact on the database. You want to collect basic statistics about the query, such as the level of parallelism, total database time, and the number of I/O requests.

For the database instance, the STATISTICS_LEVEL initialization parameter is set to TYPICAL and the CONTROL_MANAGEMENT_PACK_ACCESS parameter is set to DIAGNOSTIC+TUNING.

What should you do to accomplish this task?

- Execute the query and view Active Session History (ASH) for information about the query.

- Enable SQL trace for the query.

- Create a database operation, execute the query, and use the DBMS_SQL_MONITOR.REPORT_SQL_MONITOR function to view the report.

- Use the DBMS_APPLICATION_INFO.SET_SESSION_LONGOPS procedure to monitor query execution and view the information from the V$SESSION_LONGOPS view.

Correct answer: C

Explanation:

The REPORT_SQL_MONITOR function is used to return a SQL monitoring report for a specific SQL statement.

Incorrect Answers:

A: We are not interested in session statistics, only in statistics for the particular SQL query.

B: We are interested in statistics, not tracing.

D: The SET_SESSION_LONGOPS procedure sets a row in the V$SESSION_LONGOPS view. This view is used to indicate the ongoing progress of a long-running operation. Some Oracle functions, such as parallel execution and Server Managed Recovery, use rows in this view to indicate the status of, for example, a database backup.

Applications may use the SET_SESSION_LONGOPS procedure to advertise information on the progress of application-specific long-running tasks so that the progress can be monitored by way of the V$SESSION_LONGOPS view.
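Answer C can be sketched in SQL*Plus roughly as follows. The operation name `report_job` and the table `sales_fact` are hypothetical stand-ins for the real report query, and `forced_tracking => 'Y'` is used here on the assumption that the demo query might otherwise be too short to be monitored.

```sql
-- Sketch only: 'report_job' and sales_fact are hypothetical names.
VARIABLE eid NUMBER

-- Start a database operation; the function returns its execution ID.
BEGIN
  :eid := DBMS_SQL_MONITOR.BEGIN_OPERATION(
            dbop_name       => 'report_job',
            forced_tracking => 'Y');
END;
/

-- The query to be monitored (stand-in for the complex report query).
SELECT COUNT(*) FROM sales_fact;

-- End the database operation.
BEGIN
  DBMS_SQL_MONITOR.END_OPERATION(dbop_name => 'report_job', dbop_eid => :eid);
END;
/

-- Produce a text report for the operation.
SELECT DBMS_SQL_MONITOR.REPORT_SQL_MONITOR(
         dbop_name    => 'report_job',
         type         => 'TEXT',
         report_level => 'ALL') AS report
FROM   dual;
```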

Question 8

Identify two valid options for adding a pluggable database (PDB) to an existing multitenant container database (CDB). (Choose two.)

- Use the CREATE PLUGGABLE DATABASE statement to create a PDB using the files from the SEED.

- Use the CREATE DATABASE ... ENABLE PLUGGABLE DATABASE statement to provision a PDB by copying files from the SEED.

- Use the DBMS_PDB package to clone an existing PDB.

- Use the DBMS_PDB package to plug an Oracle 12c non-CDB database into an existing CDB.

- Use the DBMS_PDB package to plug an Oracle 11g Release 2 (11.2.0.3.0) non-CDB database into an existing CDB.

Correct answer: AD

Question 9

Your database supports a DSS workload that involves the execution of complex queries. Currently, the library cache contains the ideal workload for analysis. You want to analyze some of the queries for an application that are cached in the library cache.

What must you do to receive recommendations about the efficient use of indexes and materialized views to improve query performance?

- Create a SQL Tuning Set (STS) that contains the queries cached in the library cache and run the SQL Tuning Advisor (STA) on the workload captured in the STS.

- Run the Automatic Workload Repository (AWR) report.

- Create an STS that contains the queries cached in the library cache and run the SQL Performance Analyzer (SPA) on the workload captured in the STS.

- Create an STS that contains the queries cached in the library cache and run the SQL Access Advisor on the workload captured in the STS.

- Run the Automatic Database Diagnostic Monitor (ADDM).

Correct answer: D

Explanation:

* SQL Access Advisor is primarily responsible for making schema modification recommendations, such as adding or dropping indexes and materialized views. SQL Tuning Advisor makes other types of recommendations, such as creating SQL profiles and restructuring SQL statements.

* The query optimizer can also help you tune SQL statements. By using SQL Tuning Advisor and SQL Access Advisor, you can invoke the query optimizer in advisory mode to examine a SQL statement or set of statements and determine how to improve their efficiency. SQL Tuning Advisor and SQL Access Advisor can make various recommendations, such as creating SQL profiles, restructuring SQL statements, creating additional indexes or materialized views, and refreshing optimizer statistics.

Note:

- DSS stands for decision support system, the workload type named in the question.

- The library cache is a shared pool memory structure that stores executable SQL and PL/SQL code. This cache contains the shared SQL and PL/SQL areas and control structures such as locks and library cache handles.
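The approach in answer D might look like the sketch below. The SQL tuning set name `DSS_STS`, the task name `DSS_ACCESS_TASK`, and the schema filter `APP` are all hypothetical; the filter is an assumption about how the application's queries would be selected from the cursor cache.

```sql
-- Sketch only: DSS_STS, DSS_ACCESS_TASK and the APP schema are hypothetical names.
DECLARE
  l_cursor  DBMS_SQLTUNE.SQLSET_CURSOR;
  l_task_id NUMBER;
  l_task    VARCHAR2(30) := 'DSS_ACCESS_TASK';
BEGIN
  -- 1. Create an empty SQL tuning set.
  DBMS_SQLTUNE.CREATE_SQLSET(sqlset_name => 'DSS_STS');

  -- 2. Load it with statements currently in the library cache.
  OPEN l_cursor FOR
    SELECT VALUE(p)
    FROM   TABLE(DBMS_SQLTUNE.SELECT_CURSOR_CACHE(
                   basic_filter => 'parsing_schema_name = ''APP''')) p;
  DBMS_SQLTUNE.LOAD_SQLSET(sqlset_name => 'DSS_STS', populate_cursor => l_cursor);

  -- 3. Create a SQL Access Advisor task, link the STS as its workload, and run it.
  DBMS_ADVISOR.CREATE_TASK(DBMS_ADVISOR.SQLACCESS_ADVISOR, l_task_id, l_task);
  DBMS_ADVISOR.ADD_STS_REF(l_task, USER, 'DSS_STS');
  DBMS_ADVISOR.EXECUTE_TASK(l_task);
END;
/
```

After the task completes, the index and materialized view recommendations can be reviewed in the advisor views or generated as a script with DBMS_ADVISOR.GET_TASK_SCRIPT.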

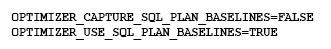

Question 10

The following parameters are set for your Oracle 12c database instance:

You want to manage the SQL plan evolution task manually. Examine the following steps:

1. Set the evolve task parameters.

2. Create the evolve task by using the DBMS_SPM.CREATE_EVOLVE_TASK function.

3. Implement the recommendations in the task by using the DBMS_SPM.IMPLEMENT_EVOLVE_TASK function.

4. Execute the evolve task by using the DBMS_SPM.EXECUTE_EVOLVE_TASK function.

5. Report the task outcome by using the DBMS_SPM.REPORT_EVOLVE_TASK function.

Identify the correct sequence of steps:

- 2, 4, 5

- 2, 1, 4, 3, 5

- 1, 2, 3, 4, 5

- 1, 2, 4, 5

Correct answer: B

Explanation:

* Evolving SQL Plan Baselines

2. Create the evolve task by using the DBMS_SPM.CREATE_EVOLVE_TASK function.

This function creates an advisor task to prepare the plan evolution of one or more plans for a specified SQL statement. The input parameters can be a SQL handle, plan name or a list of plan names, time limit, task name, and description.

1. Set the evolve task parameters by using the DBMS_SPM.SET_EVOLVE_TASK_PARAMETER procedure.

This procedure updates the value of an evolve task parameter. In this release, the only valid parameter is TIME_LIMIT.

4. Execute the evolve task by using the DBMS_SPM.EXECUTE_EVOLVE_TASK function.

This function executes an evolution task. The input parameters can be the task name, execution name, and execution description. If the execution name is not specified, the advisor generates it, and the function returns the generated name.

3. Implement the recommendations in the task by using the DBMS_SPM.IMPLEMENT_EVOLVE_TASK function.

This function implements all recommendations for an evolve task. Essentially, this function is equivalent to using ACCEPT_SQL_PLAN_BASELINE for all recommended plans. Input parameters include task name, plan name, owner name, and execution name.

5. Report the task outcome by using the DBMS_SPM.REPORT_EVOLVE_TASK function.

This function displays the results of an evolve task as a CLOB. Input parameters include the task name and section of the report to include.
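The sequence 2, 1, 4, 3, 5 can be sketched as a single PL/SQL block. The SQL handle `SQL_0123456789abcdef` is a placeholder (real handles are listed in DBA_SQL_PLAN_BASELINES), and the 300-second time limit is an arbitrary illustrative value.

```sql
-- Sketch only: the SQL handle below is a placeholder, not a real baseline.
SET SERVEROUTPUT ON
DECLARE
  l_task   VARCHAR2(128);
  l_exec   VARCHAR2(128);
  l_plans  NUMBER;
  l_report CLOB;
BEGIN
  -- Step 2: create the evolve task.
  l_task := DBMS_SPM.CREATE_EVOLVE_TASK(sql_handle => 'SQL_0123456789abcdef');

  -- Step 1: set the task parameters (the task must exist first).
  DBMS_SPM.SET_EVOLVE_TASK_PARAMETER(
    task_name => l_task,
    parameter => 'TIME_LIMIT',
    value     => 300);

  -- Step 4: execute the task.
  l_exec := DBMS_SPM.EXECUTE_EVOLVE_TASK(task_name => l_task);

  -- Step 3: implement the recommendations.
  l_plans := DBMS_SPM.IMPLEMENT_EVOLVE_TASK(task_name => l_task);

  -- Step 5: report the outcome (first 4000 characters of the CLOB).
  l_report := DBMS_SPM.REPORT_EVOLVE_TASK(task_name => l_task, execution_name => l_exec);
  DBMS_OUTPUT.PUT_LINE(DBMS_LOB.SUBSTR(l_report, 4000, 1));
END;
/
```

Setting the parameters comes after task creation because SET_EVOLVE_TASK_PARAMETER takes the task name as input.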